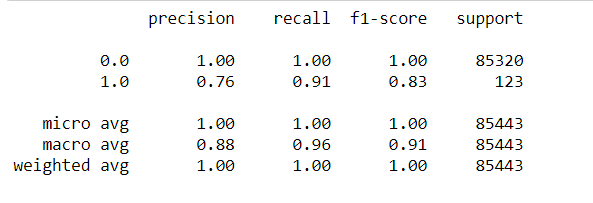

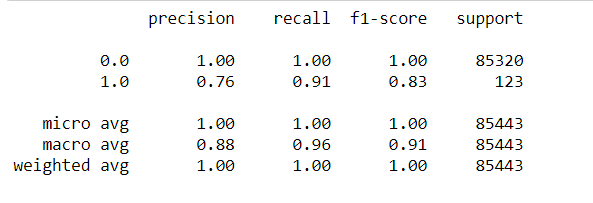

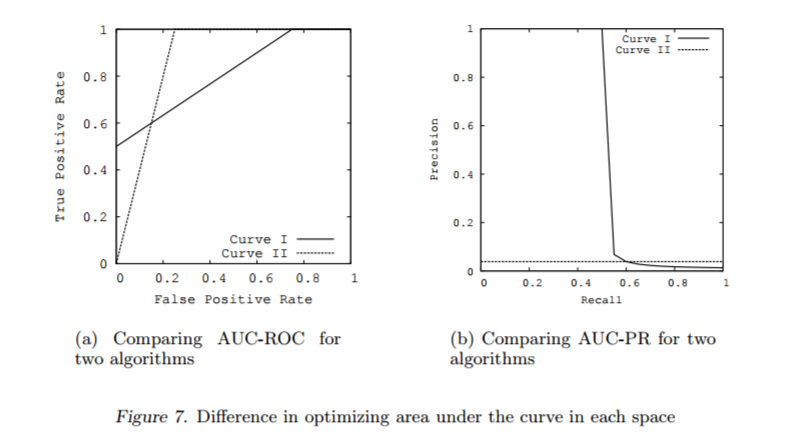

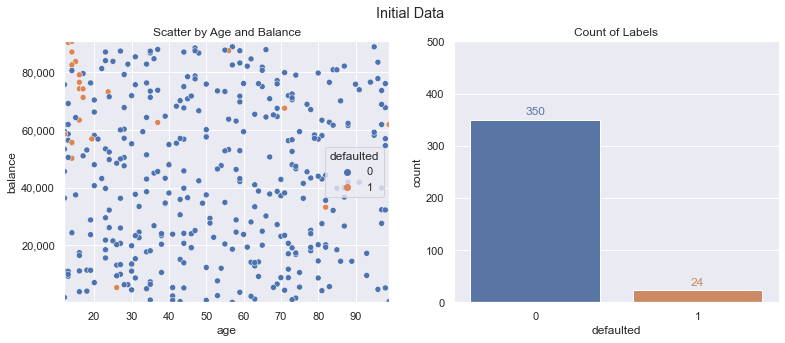

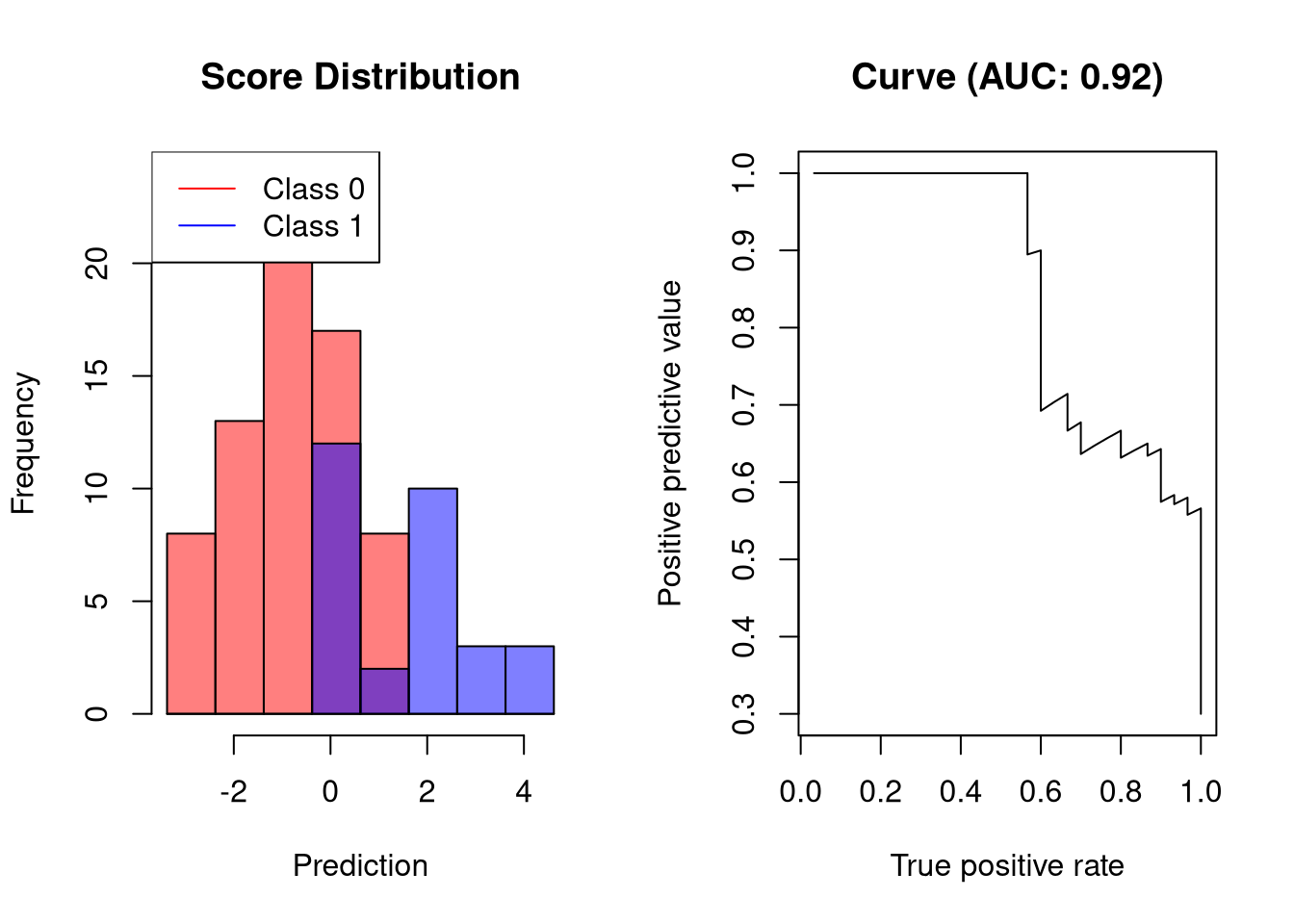

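F1 Score and Imbalanced Data.

The F1-score can be interpreted as the harmonic mean of precision and recall, with precision and recall contributing equally to it. For a given class, the different combinations of precision and recall have the following meanings: high precision and high recall means the class is handled well; high precision and low recall means the model misses many samples of the class, but its predictions for that class can be trusted; low precision and high recall means the class is detected well, but samples of other classes are often assigned to it as well; low precision and low recall means the class is handled poorly. If the F1-score is your figure of merit, I would suggest tuning the class weights.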
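
To see what the harmonic mean does, here is a small Python sketch (the function names are our own, not from any library) comparing it with the plain arithmetic mean for a model whose precision and recall are far apart.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, giving both equal weight."""
    if precision + recall == 0:
        return 0.0  # convention: F1 is 0 when precision and recall are both 0
    return 2 * precision * recall / (precision + recall)


def arithmetic_mean(precision: float, recall: float) -> float:
    """Plain average, shown only for comparison."""
    return (precision + recall) / 2


# Hypothetical model: always right when it predicts the class, but finds only 10% of it.
precision, recall = 1.0, 0.1
print(f"arithmetic mean: {arithmetic_mean(precision, recall):.3f}")        # 0.550
print(f"F1 (harmonic):   {f1_from_precision_recall(precision, recall):.3f}")  # 0.182
```

The arithmetic mean would rate this model as mediocre, while the harmonic mean stays close to the weaker of the two values, which is why a high F1 requires both precision and recall to be high.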

*Classification on Imbalanced Data Using Scikit-Learn: Important Gaps to Avoid*, by Sundar Rengarajan, Medium. From medium.com

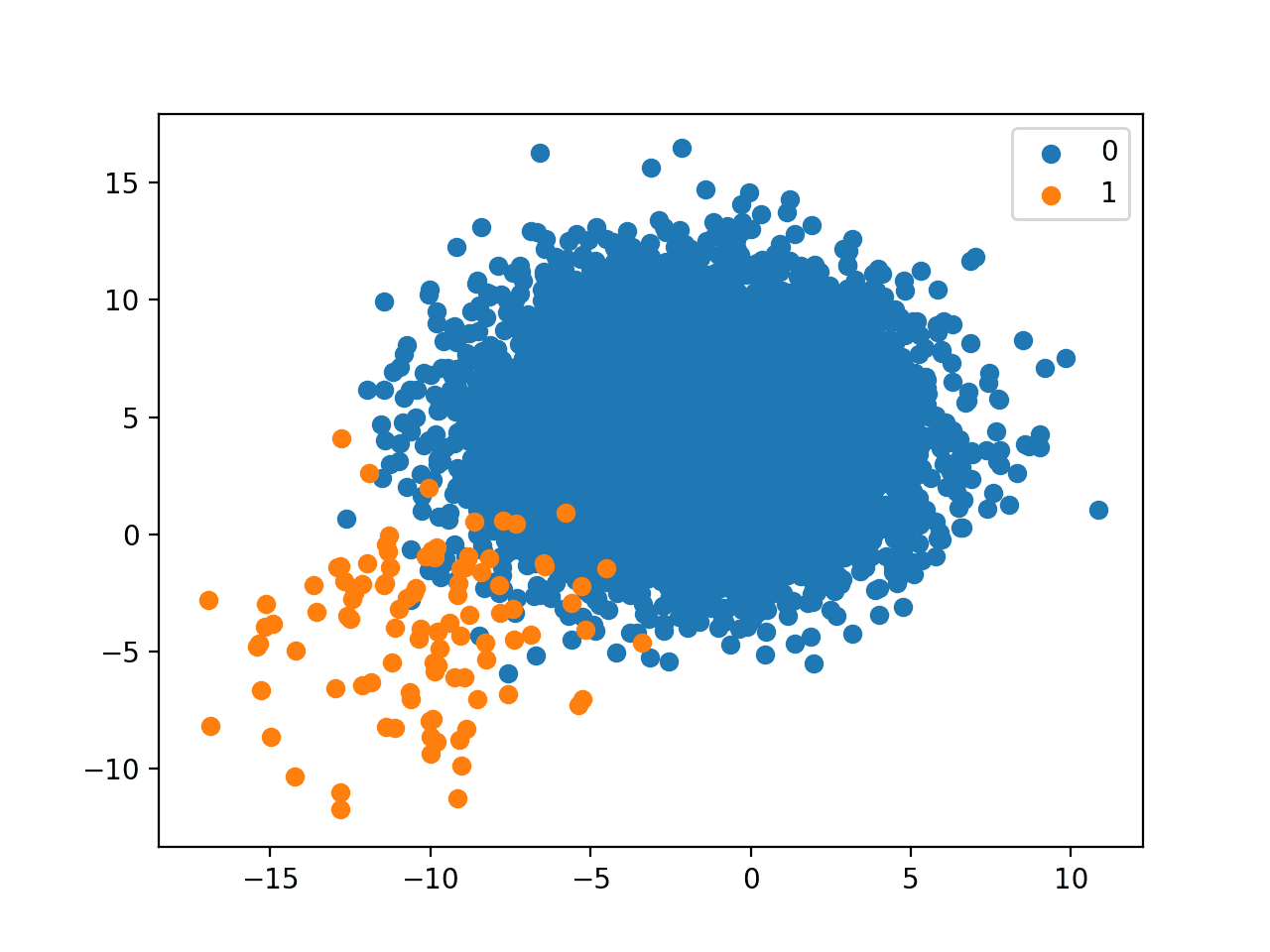

This should be fairly easy since you have a binary classification problem. Your data are imbalanced: you have many more samples of class 0 than of class 1. I am not sure whether this is what you are looking for, but since the data you want to evaluate are imbalanced, you could try a weighted measure such as a weighted F1-score. The F1-score reaches its best value at 1 and its worst at 0.
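
A minimal sketch with scikit-learn's `f1_score`, using made-up labels in which class 0 heavily outnumbers class 1. It compares the default binary score (F1 of class 1 only) with the weighted and macro averages.

```python
from sklearn.metrics import f1_score

# Hypothetical imbalanced labels: 18 samples of class 0 and 2 of class 1.
y_true = [0] * 18 + [1] * 2
# Predictions: two 0s wrongly flagged as 1, then one 1 found and one 1 missed.
y_pred = [0] * 16 + [1, 1] + [1, 0]

binary = f1_score(y_true, y_pred)                        # F1 of class 1 (default average="binary")
weighted = f1_score(y_true, y_pred, average="weighted")  # per-class F1 weighted by class counts
macro = f1_score(y_true, y_pred, average="macro")        # unweighted mean over classes
print(f"binary={binary:.3f} weighted={weighted:.3f} macro={macro:.3f}")
# binary=0.400 weighted=0.863 macro=0.657
```

The weighted score is pulled toward class 0's F1 (about 0.914), so it can look healthy even when class 1 is handled poorly; it is worth reporting the class-1 or macro score alongside it.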

The F1 score becomes especially valuable when your classification model works with an imbalanced dataset: without any correction for the imbalance, the majority class dominates the algorithm's predictions. As we have seen, the F1 score combines precision and recall into a single metric.
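
A minimal sketch of why accuracy can mislead on a 90/10 split, assuming scikit-learn is installed. The labels are made up, and the "model" simply predicts the majority class for every sample.

```python
from sklearn.metrics import accuracy_score, f1_score

# Hypothetical 90/10 split: 90 samples of class 0, 10 of class 1.
y_true = [0] * 90 + [1] * 10
# A "model" that ignores the minority class and always predicts 0.
y_pred = [0] * 100

acc = accuracy_score(y_true, y_pred)
# zero_division=0 avoids a warning: precision is undefined when no 1s are predicted.
f1 = f1_score(y_true, y_pred, zero_division=0)
print(f"accuracy={acc:.2f} F1={f1:.2f}")  # accuracy=0.90 F1=0.00
```

Accuracy rewards the model for the 90 easy majority-class samples, while F1 exposes that it never finds a single sample of class 1.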

Source: researchgate.net

Settings: `maximum_epochs` 40, `early_stop_epochs` 60. In this article, the F1 score has been presented as a model performance metric:

$$F_1 = \frac{2 \cdot PRE \cdot REC}{PRE + REC}$$

What we are trying to achieve with the F1-score is an equal balance between precision and recall, which is extremely useful in most scenarios involving imbalanced datasets, i.e. datasets with a non-uniform distribution of class labels, such as one with a 90/10 split.
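
Expressing precision and recall through confusion-matrix counts gives an equivalent form. Here TP, FP and FN (true positives, false positives, false negatives) are standard notation that the original text does not define; the derivation only substitutes their definitions into the harmonic-mean formula (assuming TP > 0 so the common factor cancels):

$$
PRE = \frac{TP}{TP + FP}, \qquad REC = \frac{TP}{TP + FN}
$$

$$
F_1 = \frac{2 \cdot PRE \cdot REC}{PRE + REC}
    = \frac{\dfrac{2\,TP^2}{(TP+FP)(TP+FN)}}{\dfrac{TP\,(2\,TP + FP + FN)}{(TP+FP)(TP+FN)}}
    = \frac{2\,TP}{2\,TP + FP + FN}
$$

True negatives do not appear anywhere in the result, which is one reason the score is not inflated by a large, correctly classified majority class.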

Source: stats.stackexchange.com

F1 score rules them all: when you have an imbalanced dataset, you should rely less on accuracy and look more at other metrics, for example the F1 score. In such a dataset, false positives will be much more numerous than false negatives.

Source: pinterest.com

Source: researchgate.net

The F1 score of a class is the harmonic mean of its precision and recall, $2 \cdot \text{precision} \cdot \text{recall} / (\text{precision} + \text{recall})$, so it combines the precision and recall of that class into one metric. In scikit-learn, `f1_score` offers a weighted option (`average="weighted"`) that takes the number of instances per label into account.
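
To make the weighted option concrete, here is a plain-Python sketch (the function names are hypothetical) that computes each class's F1 from its counts and then averages the scores, weighting each class by its number of true instances. It is meant to mirror what scikit-learn's `average="weighted"` computes, but treat it as an illustration rather than a reference implementation.

```python
from collections import Counter


def per_class_f1(y_true, y_pred):
    """Return {label: F1}, using the count form F1 = 2TP / (2TP + FP + FN)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = {}
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores[c] = 2 * tp / denom if denom else 0.0
    return scores


def weighted_f1(y_true, y_pred):
    """Average the per-class F1 scores, weighting each class by its support in y_true."""
    support = Counter(y_true)  # labels that never occur in y_true get weight 0
    scores = per_class_f1(y_true, y_pred)
    return sum(scores[c] * support[c] for c in scores) / len(y_true)


# Hypothetical labels: 8 samples of class 0, 2 of class 1.
y_true = [0] * 8 + [1] * 2
y_pred = [0] * 7 + [1] + [1, 0]
print(per_class_f1(y_true, y_pred))  # {0: 0.875, 1: 0.5}
print(weighted_f1(y_true, y_pred))   # 0.8
```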

Source: stats.stackexchange.com

F1 is a suitable measure for models tested on imbalanced datasets.

Source: medium.com

There are multiple ways to deal with imbalanced data, and each learner you have applied has its own trick for it. But I think F1 is mostly a measure for models rather than for datasets.
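
One common way to deal with imbalance (not named in the original text) is random oversampling: duplicating randomly chosen minority-class samples until the classes are the same size. A minimal NumPy sketch, with a hypothetical helper `random_oversample` and an assumed feature matrix `X` and label vector `y`:

```python
import numpy as np


def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen rows of each smaller class until every
    class has as many rows as the largest class (random oversampling)."""
    rng = np.random.default_rng(seed)
    X, y = np.asarray(X), np.asarray(y)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    X_parts, y_parts = [X], [y]
    for c, n in zip(classes, counts):
        if n < target:
            idx = np.flatnonzero(y == c)  # row indices of this class
            extra = rng.choice(idx, size=target - n, replace=True)
            X_parts.append(X[extra])
            y_parts.append(y[extra])
    return np.concatenate(X_parts), np.concatenate(y_parts)


# Hypothetical 90/10 data with 2 features per sample.
X = np.arange(200).reshape(100, 2)
y = np.array([0] * 90 + [1] * 10)
X_res, y_res = random_oversample(X, y)
print(np.bincount(y_res))  # [90 90]
```

Oversampling should be applied only to the training split, after splitting, so that duplicated rows cannot leak into the test set. The imbalanced-learn package provides a ready-made `RandomOverSampler` for the same idea.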

Source: dpmartin42.github.io

I'm trying to use the F1 score because my dataset is imbalanced. Because the F1 score condenses precision and recall into a single number, it is easy to use in grid search or other automated optimization.
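
A sketch of using F1 as the selection criterion in a grid search, assuming scikit-learn. The synthetic 90/10 dataset, the logistic-regression model and the parameter grid are illustrative choices, not taken from the original.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Synthetic binary dataset with roughly 90% class 0 and 10% class 1.
X, y = make_classification(n_samples=500, weights=[0.9, 0.1], random_state=0)

search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid={"C": [0.01, 0.1, 1.0, 10.0], "class_weight": [None, "balanced"]},
    scoring="f1",  # mean cross-validated F1 of the positive class (label 1)
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

`scoring="f1"` scores the positive class; for multiclass problems, scorer names such as `"f1_macro"` or `"f1_weighted"` select an averaged variant. Stratified folds keep the class ratio roughly constant in every fold.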

Source: towardsdatascience.com

Variance in the minority set will be larger because it contains fewer data points.
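
A back-of-the-envelope illustration, under the simplifying assumption (ours, not the original's) that each class's recall is a binomial proportion estimated from that class's samples: its standard error is $\sqrt{r(1-r)/n}$, so the class with fewer samples gets a noisier estimate. The function name and the numbers are hypothetical.

```python
import math


def recall_standard_error(recall: float, n_class_samples: int) -> float:
    """Binomial standard error of a recall estimate computed from
    n_class_samples samples of that class: sqrt(r * (1 - r) / n)."""
    return math.sqrt(recall * (1 - recall) / n_class_samples)


# Hypothetical 90/10 test set of 1,000 samples, with recall 0.8 for both classes.
for name, n in [("majority (900 samples)", 900), ("minority (100 samples)", 100)]:
    print(f"{name}: SE = {recall_standard_error(0.8, n):.3f}")
# majority (900 samples): SE = 0.013
# minority (100 samples): SE = 0.040
```

With nine times fewer samples, the minority-class estimate is three times noisier, which in turn makes that class's F1 less stable from one test split to another.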

Source: machinelearningmastery.com

Clearly, the fact that you have a relatively small number of true 1s in your dataset affects the performance of your classifier.

Source: datascience.stackexchange.com

For a multiclass problem with classes 0, 1 and 2, you can compute the F1-score for each class and average the results; this way you get an averaged F1-score. F1 compares models, though, not datasets: you could not say that dataset A is better than dataset B.
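
A sketch of the per-class and averaged scores for a made-up three-class problem with labels 0, 1 and 2, assuming scikit-learn. Macro averaging treats every class equally, weighted averaging weights each class by its number of true instances, and micro averaging pools all counts (for single-label multiclass data it equals accuracy).

```python
from sklearn.metrics import f1_score

# Hypothetical three-class labels (0, 1 and 2) in which class 0 dominates.
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 0, 0, 1, 1, 0, 2, 1]

# One F1-score per class, in label order 0, 1, 2.
per_class = [round(float(s), 3) for s in f1_score(y_true, y_pred, average=None)]
print(per_class)  # [0.833, 0.4, 0.667]

# Three ways to average the per-class scores into a single number.
for avg in ("macro", "weighted", "micro"):
    print(f"{avg}: {f1_score(y_true, y_pred, average=avg):.3f}")
# macro: 0.633, weighted: 0.713, micro: 0.700
```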

Source: researchgate.net

Source: stats.stackexchange.com

The F1 score has nothing to do with Lewis Hamilton or Michael Schumacher; it is the harmonic mean of precision and recall.

Source: datascienceblog.net

You can pass `class_weight` a dictionary with a weight for each class.
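
A sketch of both forms of `class_weight` in scikit-learn's `LogisticRegression`, on a synthetic 90/10 dataset. The weight 5.0 for class 1 is an arbitrary illustrative value, and `compute_class_weight` is used only to show what the built-in `"balanced"` setting would produce.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.utils.class_weight import compute_class_weight

# Synthetic binary dataset with roughly 90% class 0 and 10% class 1.
X, y = make_classification(n_samples=1000, weights=[0.9, 0.1], random_state=0)

# "balanced" weights are n_samples / (n_classes * count of each class).
classes = np.unique(y)
balanced = compute_class_weight(class_weight="balanced", classes=classes, y=y)
print(dict(zip(classes.tolist(), balanced.round(2).tolist())))

# Explicit dictionary: errors on class 1 cost 5 times as much as errors on class 0.
# The value 5.0 is illustrative; tune it, for example by maximizing F1 on validation data.
clf = LogisticRegression(class_weight={0: 1.0, 1: 5.0}, max_iter=1000).fit(X, y)
```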

Source: researchgate.net

Source: peltarion.com

Source: mathworks.com

Why is F1 good for imbalanced datasets? Unlike accuracy, the F1 score of the positive class is computed only from true positives, false positives and false negatives, so the many correctly classified majority-class samples (true negatives) cannot inflate it.
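
A small plain-Python sketch with made-up counts: the errors involving the minority class stay fixed while the number of true negatives grows. The helper names are hypothetical.

```python
def accuracy(tp, fp, fn, tn):
    """Fraction of all samples that are classified correctly."""
    return (tp + tn) / (tp + fp + fn + tn)


def f1(tp, fp, fn):
    """F1 = 2TP / (2TP + FP + FN); true negatives never enter the formula."""
    return 2 * tp / (2 * tp + fp + fn)


# Same minority-class counts, more and more correctly rejected majority samples.
tp, fp, fn = 5, 5, 5
for tn in (10, 100, 1000):
    print(f"TN={tn:5d}  accuracy={accuracy(tp, fp, fn, tn):.3f}  F1={f1(tp, fp, fn):.3f}")
```

With these counts, accuracy climbs from 0.600 to 0.913 to 0.990, while F1 stays at 0.500 because the model is no better at finding the minority class.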

Source: machinecurve.com

When it comes to the datasets themselves, there is no better or worse here.

Source: in.pinterest.com
